I have commenced the long and tedious task of checking the Acorn adjustments of minimum temperatures at various stations by comparing with the lists of “highly correlated” neighbouring stations that the Bureau of Meteorology has kindly but so belatedly provided. Up to 10 stations are listed for each adjustment date, and these are presumably the sites used in the Percentile Matching process.

The Bureau assumes that any climate shift will show up at all stations in the same (though undefined) region. Therefore, by finding the differences between the target or candidate station’s data and its neighbours’ data, we can test for ‘inhomogeneities’ in the candidate site’s data, as explained in CTR-049, pp. 44-47. Any inhomogeneity will show up as a breakpoint, where the data appear to rise or fall suddenly compared with the neighbours. Importantly, this method can be used to test both the raw and the adjusted data.
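For readers who want to try this themselves, here is a minimal Python sketch of the differencing step only (it is not the Bureau’s Percentile Matching method, and not necessarily exactly how my spreadsheets do it). It assumes each station’s annual anomalies are held in a dictionary mapping year to value, and the function name `difference_series` is my own, for illustration. For each year it averages the candidate-minus-neighbour differences over whichever neighbours have data that year.

```python
from statistics import mean


def difference_series(candidate, neighbours):
    """Average of (candidate - neighbour) for each year.

    candidate:  dict of year -> annual anomaly at the target station
    neighbours: list of dicts, each year -> annual anomaly at one comparison station
    Years in which no neighbour has data are skipped.
    """
    result = {}
    for year, value in sorted(candidate.items()):
        # Difference from every neighbour that reported in this year.
        diffs = [value - n[year] for n in neighbours if year in n]
        if diffs:
            result[year] = mean(diffs)
    return result
```

A step change in the candidate’s record that the neighbours do not share appears in this output as a sudden jump, which is the breakpoint described above.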

Ideally, a perfect station with perfect neighbours would show zero differences: the average of its differences from them would be a straight line at zero. Even if the differences fluctuate from year to year, there should be no trend. Any trend indicates that past temperatures at the station being studied appear to be either relatively too warm or too cool. My purpose here is not to evaluate whether individual adjustments are justified, but to check whether the adjusted Acorn dataset agrees with the neighbours more closely than the raw data does. If it does, the trend in differences should be close to zero.
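The “trend in differences” can be measured as an ordinary least-squares slope, the usual linear trend line. The sketch below assumes the differences are a dictionary of year to value; `ols_slope` is a hypothetical helper name. The result is in °C per year, so multiply by 10 for °C per decade.

```python
def ols_slope(series):
    """Least-squares linear trend (units per year) of a year -> value mapping."""
    years = list(series)
    values = [series[y] for y in years]
    n = len(years)
    if n < 2:
        raise ValueError("need at least two years to fit a trend")
    x_bar = sum(years) / n
    y_bar = sum(values) / n
    # Slope = covariance(year, value) / variance(year)
    sxx = sum((x - x_bar) ** 2 for x in years)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, values))
    return sxy / sxx
```

A well-matched station should give a slope near zero here; a clearly positive slope means the candidate has warmed relative to its neighbours.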

In all cases I used differences in annual minima anomalies from the 1961-1990 mean or, if the overlap was shorter than this period, anomalies from the actual period of overlap. Where I was unable to calculate differences for an Acorn merge or recent adjustment because suitable overlapping data were absent (e.g. Amberley 1997; Bourke 1999 and 1994), I have, as a further test, assumed these adjustments are correct and applied them to the raw data.
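The two preparation steps in the paragraph above can be sketched in Python as follows. This is my reading of the rules, not a definitive implementation: `choose_base_years` uses 1961-1990 when both stations cover all of it and otherwise falls back to their common years, and `apply_step_adjustment` assumes (as is usual for step adjustments) that the offset is added to every year before the adjustment date. All function names are hypothetical.

```python
from statistics import mean


def choose_base_years(candidate, neighbour):
    """Use 1961-1990 if both stations cover it fully, else their years of overlap."""
    standard = set(range(1961, 1991))
    overlap = set(candidate) & set(neighbour)
    return standard if standard <= overlap else overlap


def anomalies(series, base_years):
    """Anomalies (same units as series) from the mean over base_years."""
    base = [series[y] for y in base_years if y in series]
    if not base:
        raise ValueError("no data in base period")
    ref = mean(base)
    return {y: v - ref for y, v in series.items()}


def apply_step_adjustment(series, before_year, amount):
    """Add `amount` to every year before `before_year` (assumed convention)."""
    return {y: (v + amount if y < before_year else v) for y, v in series.items()}
```

For example, treating the Amberley 1997 merge as correct would correspond to something like `apply_step_adjustment(raw, 1997, -0.44)`, where `raw` is the station’s annual series, before recomputing the differences.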

I have completed analyses for Rutherglen, Amberley, Bourke, Deniliquin, and Williamtown.

The results are startling.

In every case, the average difference between the Acorn adjusted data and the neighbouring comparison stations shows a strongly positive trend, indicating that Acorn does not accurately reflect the regional climate.

Even when later adjustments are assumed to be correct, the same effect is seen.

Interim Conclusion:

Differencing the raw and adjusted data against the listed comparison stations at five of the sites that Jennifer Marohasy, Jo Nova, or I have discussed recently shows that Acorn adjustments to minima have a distinct warming bias. It remains to be seen whether this is a widespread phenomenon.

I will continue analysing using this method for other Acorn sites, including those that are strongly cooled. At those sites I expect to find the opposite: that the differences show a negative trend.

Scroll down for graphs showing the results.

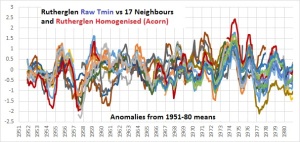

Rutherglen

(Note that the Rutherglen raw-minus-neighbours trend is flat, indicating good agreement with the region. Adjustments for discontinuities should maintain this relationship.)

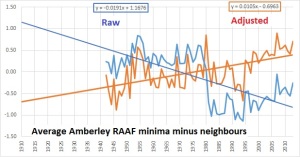

Amberley (a)

(Note that the 1980 discontinuity is plainly visible, but it may have been over-corrected.)

Amberley (b): 1997 merge (-0.44) assumed correct

Treating the 1997 adjustment as correct has no effect on the trend in differences.

Bourke (a)

Bourke (b): 1999 and 1994 merges assumed correct.

No change in the trend of differences.

Deniliquin

(Note the adjusted differences still show a strong positive trend, but less than the other examples.)

Williamtown

(Applying an adjustment to all years before 1969 produces a strong positive trend in differences.)